The

IBM

Ponder This puzzle for January 2017 asked for a rational

solution for x

y=y

x, where the last eight

digits of x are different from each other, and for a bonus asked for

a solution where the last eight digits of x are a permutation of

1,2,3,4,5,6,7,8.

It is possible to do better than this, and construct a solution

where the last nine digits of x are are a permutation of

1,2,3,4,5,6,7,8,9 , and a solution where the last ten digits of x

are are a permutation of 0,1,2,3,4,5,6,7,8,9.

Original Problem and Bonus

Goldbach and Euler proved that a set of 2 rationals {x,y} satisfies

x

y=y

x iff $

\{x,y\} = \{ (\frac{n+1}{n})^n , (\frac{n+1}{n})^{n+1} \} $

for integer n.

[1],

[2]

If these numbers are to have last digits, n must be of the form $ n

= 2^t 5^f $

$ \frac{n+1}{n}$ has $ d =

max(t,f) $ decimal places.

$ \frac{(n+1)10^d}{n} $ is an integer whose last

digits are the last decimal digits of $ \frac{n+1}{n}$

The last $D$ digits of $x,y$ are

$ (\frac{(n+1)10^d}{n})^{n+k} $ mod $ 10^D $, for

$k = 0,1 $

Which can be calculated as

$ ((n+1)2^{d-t}5^{d-f})^{n+k} $ mod $ 10^D$, for

$k = 0,1 $

or

$ (10^d +2^{d-t}5^{d-f})^{n+k} $ mod $ 10^D$, for

$k = 0,1 $

...... (1)

For modest values of n, it is easy to calculate these residues using

modular exponentiation, for example in C or Python.

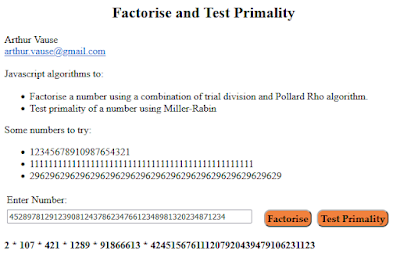

This

Python program searches for solutions to both problems.

The first problem has a solution for $n=2^4 \cdot 5^1 =80$, $x =(

\frac{81}{80})^{80} $

The bonus problem has a solution for $n= 5^5 = 3125$, $x =(

\frac{3126}{3125})^{3126} $

Extending the Problems

Now the more interesting problems: find a solution where the

last nine digits of x are are a permutation of 1,2,3,4,5,6,7,8,9 ,

and a solution where the last ten digits of x are are a permutation

of 0,1,2,3,4,5,6,7,8,9.

Here we need to examine larger values of $n$, so expression (1) becomes impractical to use and we have to find a better way of determining the last digits of $ (\frac{(n+1)10^d}{n})^{n+k} $

If $d \ge D$, then (1) can be reduced to

$ (2^{d-t}5^{d-f})^{n+k} $ mod $ 10^D$, for $k =

0,1 $

There are two cases to consider:

$f>t$

$ (2^{d-t}5^{d-f})^{n+k} $ mod $ 10^D = (

2^{f-t})^{n+k} $ mod $ 10^D = 2^{(f-t)(n+k)} $ mod $ 10^D $

Now [3]

shows that powers of 2 mod $10^D$ cycle with a period of $P = 4

\cdot 5^{D-1}$, starting at $2^D$

so if $ (f-t)(n+k) = (f-t)(2^t 5^f+k) \ge D $,

$2^{(f-t)(n+k)} $ mod $ 10^D $ = $ 2^{ D + ((f-t)(2^t 5^f+k) - D) \: mod \: P } $ mod $ 10^D

$

..... (2)

$t >f$

$ (2^{d-t}5^{d-f})^{n+k} $ mod $ 10^D = ( 5^{t-f})^{n+k}

$ mod $ 10^D = 5^{(t-f)(n+k)} $ mod $ 10^D $

[4]

shows that powers of 5 mod $10^D$ cycle with a period of

$P=2^{D-2}$, starting at $5^D$

so if $ (t-f)(n+k) = (t-f)(2^t 5^f+k) \ge D $,

$5^{(t-f)(n+k)} $ mod $ 10^D $ = $ 5^{ D + ((t-f)(2^t 5^f+k) - D) \: mod \: P } $ mod $

10^D $

..... (3)

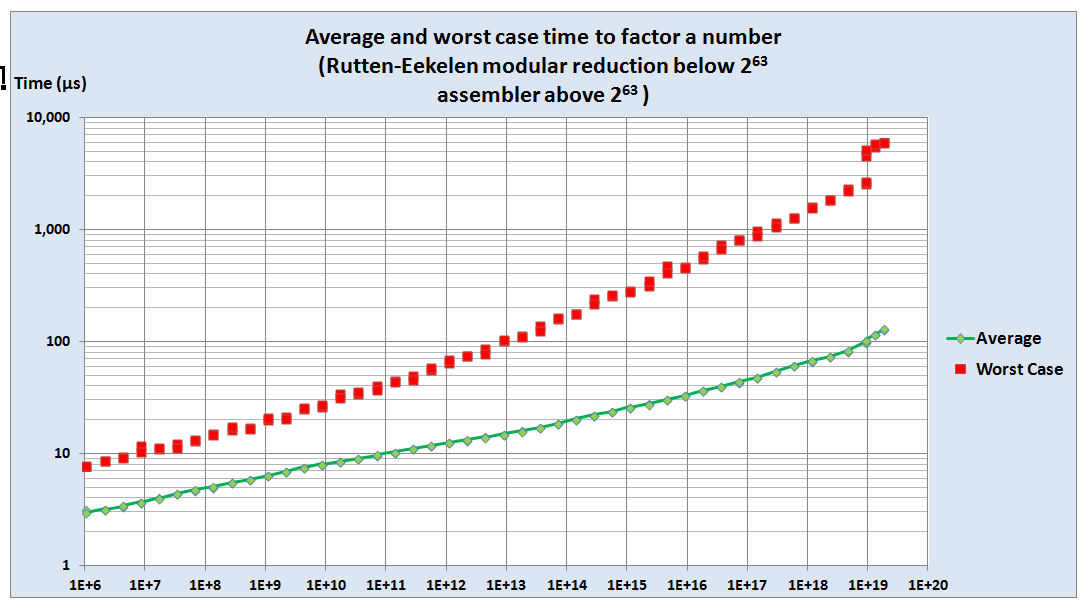

The residues in (2) and (3) can be calculated using modular

exponentiation without the need to handle excessively large numbers.

This

Python program searches for solutions to both problems, finding the following solutions:

For $n = 5^{267}$, the last ten digits of $( \frac{n+1}{n})^{n+1} $

are 3628975104

For $n = 2^2 \cdot 5^{957}$, the last nine digits of $(

\frac{n+1}{n})^{n+1} $ are 247315968

[1]

Cut

The Knot, Rational Solutions to x^y = y^x

[2] Sved, Marta. “On the Rational Solutions of x

y = y

x.”

Mathematics Magazine, vol. 63, no. 1, 1990, pp. 30–33.,

http://www.jstor.org/stable/2691508.

[3] Exploring Binary,

Cycle

Length of Powers of Two Mod Powers of Ten, Rick Regan,

November 6th, 2009

[4] Exploring Binary,

Cycle

Length of Powers of Five Mod Powers of Ten, Rick Regan,

December 22nd, 2009